OpenAI’s 2026 Ruling: LinkedIn Users’ AI Risks Exposed

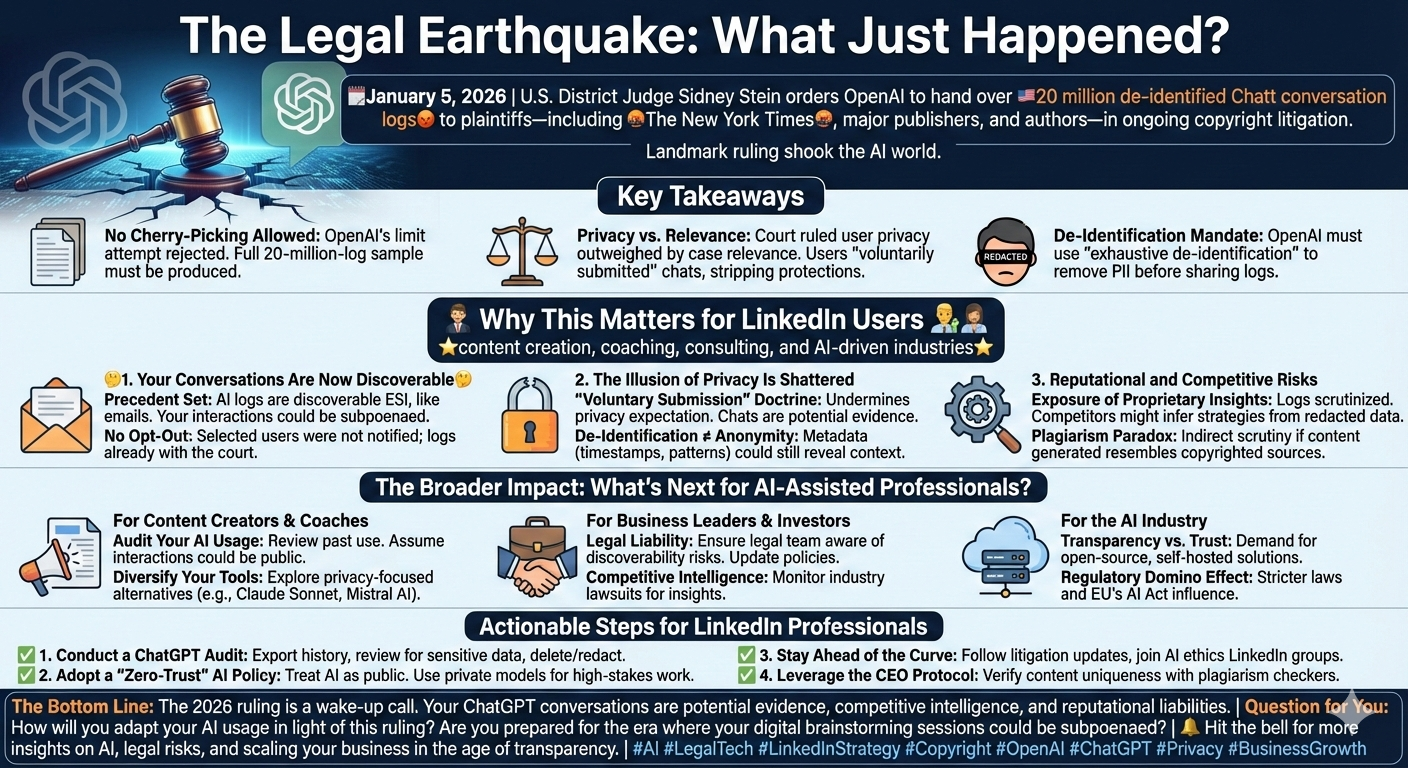

The Legal Earthquake: What Just Happened?

On January 5, 2026, a landmark ruling shook the AI world. U.S. District Judge Sidney Stein ordered OpenAI to hand over 20 million de-identified ChatGPT conversation logs to plaintiffs, including The New York Times, major publishers, and authors, as part of its ongoing copyright litigation. The decision marks a turning point in how AI-generated content is scrutinized and how user data is treated in legal battles.

Key Takeaways:

– No Cherry-Picking Allowed: OpenAI’s attempt to limit the logs using keyword searches was rejected. The full 20-million-log sample must be produced.

– Privacy vs. Relevance: The court ruled that the logs’ relevance to the case outweighed user privacy interests. Judge Stein noted that users “voluntarily submitted” their conversations to OpenAI, so the logs do not receive the same protections as, say, wiretapped recordings.

– De-Identification Mandate: OpenAI must use its “exhaustive de-identification” tools to remove personally identifiable information (PII) before sharing the logs.

Why This Matters for LinkedIn Users

LinkedIn professionals, especially those in content creation, coaching, consulting, and AI-driven industries, have been leveraging ChatGPT for everything from drafting posts to brainstorming business strategies.

But the 2026 ruling introduces three critical risks for users:

1. Your Conversations Are Now Discoverable

– Precedent Set: The ruling establishes that AI conversation logs are discoverable electronically stored information (ESI), akin to emails or internal company messages. This means your ChatGPT interactions could be subpoenaed in future litigation—whether you’re directly involved or not.

– No Opt-Out: Users whose logs are part of the sample were not notified and cannot opt out. If your conversations are in the sample, they will be produced to the plaintiffs in de-identified form.

2. The Illusion of Privacy Is Shattered

– “Voluntary Submission” Doctrine: The court’s argument that users “voluntarily submitted” their data to OpenAI undermines the expectation of privacy. Even if you assumed your chats were confidential, they’re now treated as potential evidence.

– De-Identification ≠ Anonymity: While OpenAI must strip PII, metadata (e.g., timestamps, conversation patterns) could still reveal sensitive context—especially for professionals discussing proprietary strategies or client details.

3. Reputational and Competitive Risks

– Exposure of Proprietary Insights: If you’ve used ChatGPT to refine your LinkedIn content, business models, or coaching frameworks, those logs could be scrutinized. Competitors or litigants might infer your strategies, even if your name is redacted.

– Plagiarism Paradox: The plaintiffs are testing whether ChatGPT reproduces copyrighted content. If your LinkedIn posts or professional materials were generated with ChatGPT, you could face indirect scrutiny—especially if your content resembles copyrighted sources.
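To make the “De-Identification ≠ Anonymity” point concrete, here is a minimal sketch of pattern-based redaction. It is an illustration only and not OpenAI’s de-identification tooling: the `EMAIL` and `PHONE` patterns, the `redact` function, and the sample message are all hypothetical, and real de-identification pipelines are far more thorough.

```python
import re

# Hypothetical, deliberately simple patterns for two common kinds of PII.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


# Invented sample message, not taken from any real log.
message = (
    "Email jane.doe@example.com or call +1 555-010-9999 "
    "about the Q3 pricing plan for our Denver launch."
)
print(redact(message))
```

The contact details are replaced, but the business context (a Q3 pricing plan tied to a Denver launch) passes through untouched. That leftover context is what a competitor or litigant could still read.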

The Broader Impact: What’s Next for AI-Assisted Professionals?

For Content Creators & Coaches

– Audit Your AI Usage: Review how you’ve used ChatGPT for LinkedIn posts, client deliverables, or internal documents. Assume those interactions could become public.

– Diversify Your Tools: Explore privacy-focused AI alternatives (e.g., locally hosted models) for sensitive work. Other hosted tools, such as Claude or Mistral AI, have their own data-retention terms, so review each provider’s policy before assuming it offers stronger protection.

For Business Leaders & Investors

– Legal Liability: If your team uses AI for strategic planning or proprietary research, ensure your legal team is aware of the discoverability risks. Update NDAs and data-handling policies accordingly.

– Competitive Intelligence: Your competitors’ AI-generated strategies could soon be indirectly accessible through litigation. Monitor industry lawsuits for insights.

For the AI Industry

– Transparency vs. Trust: This ruling forces a reckoning: Can users trust AI platforms with sensitive data? Expect a surge in demand for open-source, self-hosted AI solutions where users control their logs.

– Regulatory Domino Effect: Governments may impose stricter data retention and disclosure laws for AI companies. The EU’s AI Act could serve as a blueprint for global standards.

Actionable Steps for LinkedIn Professionals

1. Conduct a ChatGPT Audit:

– Export your ChatGPT history (if available) and review for sensitive discussions.

– Delete or redact conversations involving client data, unreleased strategies, or proprietary content.

2. Adopt a “Zero-Trust” AI Policy:

– Treat AI tools like public forums. Avoid inputting confidential information unless absolutely necessary.

– Use private or air-gapped AI models for high-stakes work.

3. Stay Ahead of the Curve:

– Follow updates on the OpenAI litigation (The New York Times v. OpenAI) and on similar copyright cases against other AI companies.

– Join LinkedIn groups focused on AI ethics and legal risks to share insights with peers.

4. Leverage the CEO Protocol:

– If you’re using AI for LinkedIn content, ensure your posts comply with copyright and originality standards. Use tools like Copyscape or Grammarly’s plagiarism checker to verify uniqueness.
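The audit in step 1 can be partly automated. The sketch below assumes you have a JSON export of your chat history that parses to a list of conversations. The file name `conversations.json`, the watch list `SENSITIVE_TERMS`, and the function names are assumptions for illustration. Because the exact export schema may vary, the code simply walks every string in each conversation instead of relying on specific field names.

```python
import json
import re
from typing import Any, Iterator

# Hypothetical watch list; replace with your own client names and project terms.
SENSITIVE_TERMS = ["confidential", "nda", "client", "salary", "unreleased"]


def iter_strings(node: Any) -> Iterator[str]:
    """Yield every string value found anywhere inside a parsed JSON value."""
    if isinstance(node, str):
        yield node
    elif isinstance(node, dict):
        for value in node.values():
            yield from iter_strings(value)
    elif isinstance(node, list):
        for item in node:
            yield from iter_strings(item)


def flag_conversations(conversations, terms):
    """Return (index, matched_terms) for each conversation that mentions a term."""
    # Whole-word, case-insensitive match, so "nda" does not fire on "agenda".
    patterns = {t: re.compile(r"\b" + re.escape(t) + r"\b", re.IGNORECASE) for t in terms}
    flagged = []
    for index, conversation in enumerate(conversations):
        text = "\n".join(iter_strings(conversation))
        hits = [t for t, pattern in patterns.items() if pattern.search(text)]
        if hits:
            flagged.append((index, hits))
    return flagged


def audit_export(path, terms=SENSITIVE_TERMS):
    """Load an exported history file (assumed to be a JSON list) and flag it."""
    with open(path, encoding="utf-8") as fh:
        return flag_conversations(json.load(fh), terms)
```

Calling `audit_export("conversations.json")` would return the positions of conversations worth reviewing by hand. Keyword matching only narrows the search. It will miss sensitive material that uses none of the listed terms, so treat the result as a starting point for review.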

The Bottom Line

The 2026 ruling isn’t just about OpenAI—it’s a wake-up call for every professional who’s integrated AI into their workflow. Your ChatGPT conversations are no longer private assets; they’re potential evidence, competitive intelligence, and reputational liabilities.

Question for You:

How will you adapt your AI usage in light of this ruling?

Are you prepared for the era where your digital brainstorming sessions could be subpoenaed?